Context Pollution in LLMs

We are seeing day by day more applications leveraging Large Language Models (LLMs) to not only provide suggestion, but even to generate the full code. Although this is a revolutionary moment in the way we interact with technology, we are still facing important challenges when it comes to the quality of the results provided by these models.

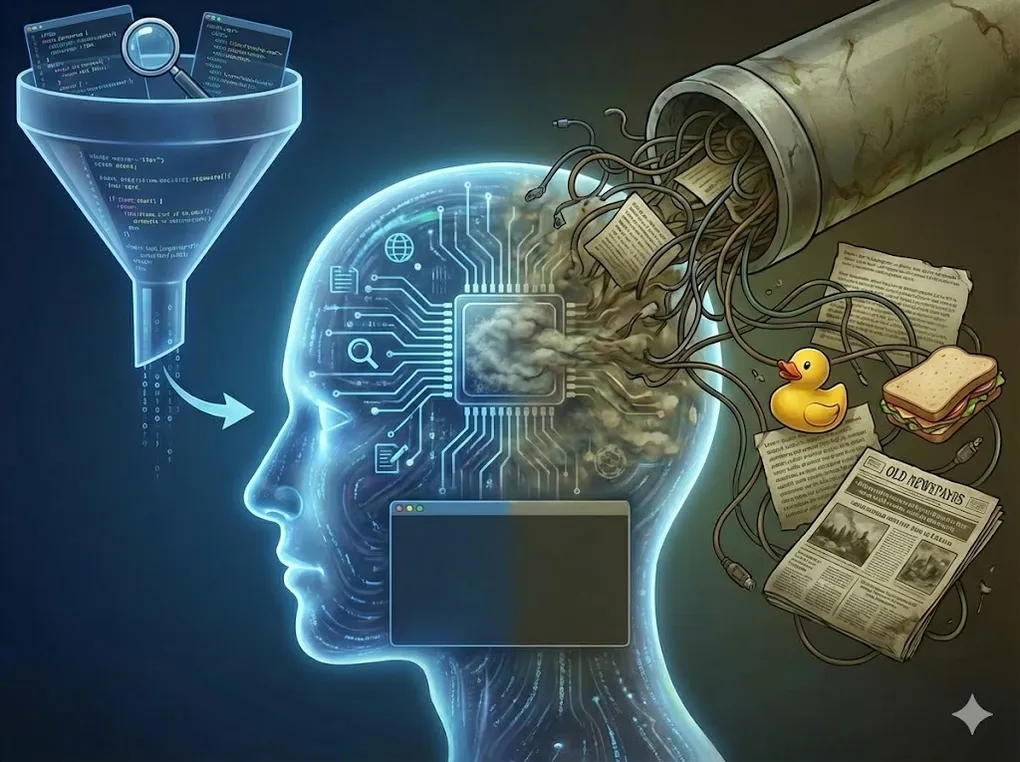

One of the complaints that is becoming more and more common is when the model seems to “lose track” of the original intent of the user, providing code or suggestion far from what was expected. What at the beggining was identified as “the model is hallucinating” is now being tagged as “context pollution”.

What is Context Pollution?

In the past two years, the context window of LLMs has increased significantly, allowing these models to process and understand larger amounts of text. However, as the context window grows, so does the risk of context pollution. Context pollution occurs when instead of focusing on the key concepts relevant to the user’s query, the model gets distracted by irrelevant or extraneous information present in the context data. This can lead to responses that are off-topic, inaccurate, or misleading.

An example:

Imagine a user asking an LLM to generate a function that calculates the factorial of a number. If the context provided to the model includes unrelated information about sorting algorithms, the model might produce a response that mixes concepts from both topics, resulting in a function that does not correctly calculate the factorial.

This phenomenon can be particularly problematic in scenarios where the context includes a large amount of data, such as lengthy documents, codebases, or conversations. The model may struggle to identify which parts of the context are relevant to the user’s query, leading to suboptimal results.

Why Context Pollution Matters

At the beggining, context pollution was seen as a minor inconvenience, but as LLMs are being integrated into more critical applications, the implications of context pollution have become more significant. Inaccurate or misleading responses can lead to errors in decision-making, reduced user trust, and potential harm in applications such as healthcare, finance, and legal services.

The models in agentic-mode are implementing code, instead of just suggesting it. In these scenarios, context pollution can lead to the generation of faulty code, which can have serious consequences if deployed in production environments.

Nowadays developers throw more and more context (thousands and thousands of lines of code) to the LLMs, expecting them to be able to filter out the noise and focus on the relevant information. However, as the amount of context increases, so does the risk of context pollution, making it harder for the model to provide accurate and relevant responses.

How to Mitigate Context Pollution

I am sure that sooner than later, new models will come with a better understanding of context and improved mechanisms to filter out irrelevant information. However, until then, there are several strategies that developers and users can employ to mitigate the effects of context pollution:

- Context Curation: Carefully select and curate the context provided to the model. Remove any irrelevant or extraneous information that may distract the model from the user’s query.

- Prompt Engineering: Design prompts that clearly specify the user’s intent and guide the model towards the relevant information. This can help the model focus on the key concepts and reduce the risk of context pollution.

- Chunking: Break down large contexts into smaller, more manageable chunks. This can help the model process the information more effectively and reduce the likelihood of context pollution.

- Iterative Refinement: Use an iterative approach to refine the model’s responses. Start with a broad context and gradually narrow it down based on the model’s outputs.

- Feedback Loops: Implement feedback mechanisms that allow users to provide input on the model’s responses. This can help identify instances of context pollution and improve the model’s performance over time.

Conclusion

Context pollution is a significant challenge in the use of Large Language Models, particularly as they are integrated into more critical applications. By understanding the causes and implications of context pollution, developers and users can take proactive steps to mitigate its effects and improve the quality of the model’s responses. As the field of AI continues to evolve, it is likely that new techniques and models will emerge to address this issue more effectively, but until then, careful context management and prompt design remain essential tools in the fight against context pollution.